I’ve always been drawn to the trolley problem… not as a philosopher, but as an engineer. Engineers like to define parameters, identify metrics, and run the math. So I decided to treat the trolley problem like a design exercise: what would happen if we coded it into a decision system?

Problem:

The trolley problem is a classic thought experiment in ethics.

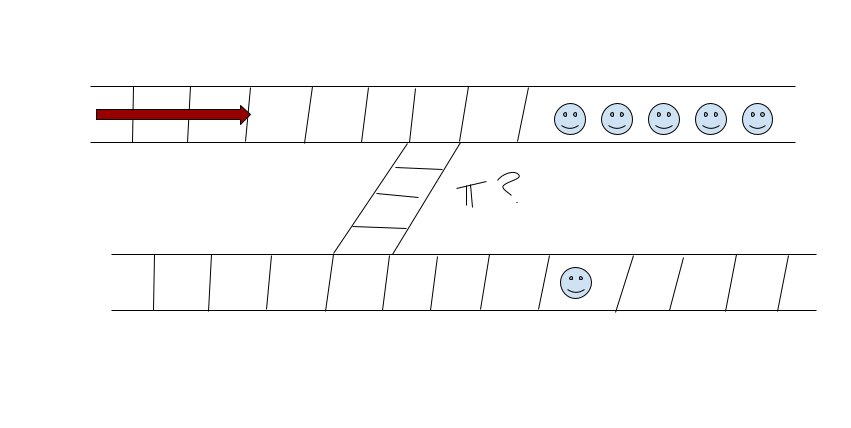

A runaway trolley is hurtling down the tracks. Ahead of it are five people tied to the track. If the trolley continues, all five will die. You’re standing next to a lever. If you pull it, the trolley will divert onto a side track, but there’s one person tied there.

So the choice is simple but brutal:

- Do nothing → five die.
- Pull the lever → one dies.

Philosophers use this setup to debate utilitarianism, the morality of action vs. inaction, and what it means to be responsible.

As an engineer, though, I wanted to see what happens if you try to “solve” it like a programming problem.

Do you swap the tracks?

Step 1: Add some engineering assumptions

Engineers like toggles and inputs. So I added one twist:

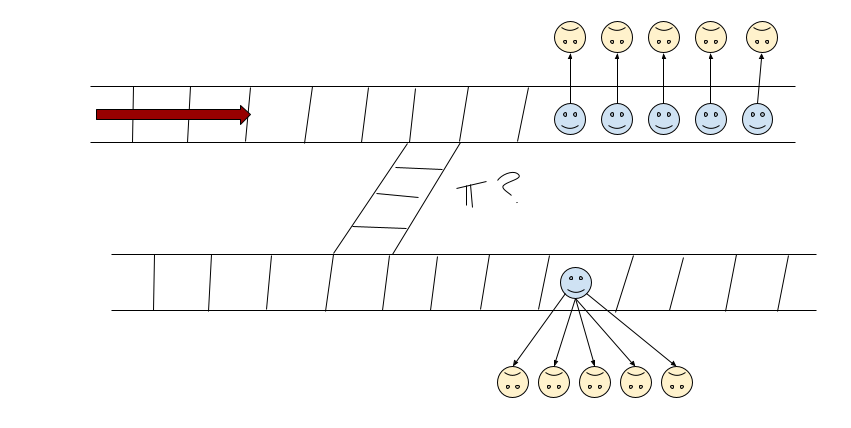

Imagine each of these people has been in a trolley scenario before. The five each chose to pull the lever (kill one to save many). The one person chose to do nothing (let many die).

Do we treat that history as relevant “bias,” or do we ignore it? That’s the kind of switch you’d want in a real decision system.
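One way to picture that switch is as a field on the scenario itself. The sketch below is a minimal illustration, not a real decision system: the names `Person`, `Scenario`, `pulled_lever_before`, and `use_history` are all hypothetical and chosen only for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Person:
    # True if this person pulled the lever in an earlier trolley scenario.
    pulled_lever_before: bool


@dataclass(frozen=True)
class Scenario:
    main_track: tuple[Person, ...]  # people the trolley is currently heading toward
    side_track: tuple[Person, ...]  # people on the track the lever diverts to
    use_history: bool = False       # the "bias" toggle (assumed name)


def build_scenario(use_history: bool = False) -> Scenario:
    """Build the 5-vs-1 setup described above, with the history twist."""
    five = tuple(Person(pulled_lever_before=True) for _ in range(5))
    one = (Person(pulled_lever_before=False),)
    return Scenario(main_track=five, side_track=one, use_history=use_history)
```

With the toggle off, a decision function would simply ignore `pulled_lever_before`. What it should do when the toggle is on is exactly the ethical question, so the sketch deliberately leaves that part out.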

Step 2: Define objectives

A system needs a clear optimization goal. I tested three:

- Minimize change in the universe (least disruption).
- Maximize life (save the most).
- Minimize death (kill the fewest).

Step 3: Run the logic

- Maximize life / Minimize death

  Easy: switching saves 5 instead of 1. Both metrics say pull the lever.

- Minimize change in the universe

  This seems to point to pulling the lever (1 death < 5 deaths). But “change” is fuzzy. Are we measuring number of deaths? The moral weight of agency? Ripple effects? This is where the definition gets shaky.

- Bias toggle

  If you use history, maybe the five “deserve” less protection because they previously sacrificed others. But that’s ethically dubious. Prior choices don’t necessarily determine the value of a life now. That feels more like karmic accounting than engineering.
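The three objectives from Step 2 can be sketched as scoring functions over the two available actions. This is a toy under stated assumptions: the action names and the `OBJECTIVES` table are invented for illustration, and "minimize change" is scored by death count alone, which is only one of the possible readings questioned above. The bias toggle is left out.

```python
MAIN_TRACK = 5  # people on the track the trolley is already on
SIDE_TRACK = 1  # people on the track the lever diverts to

ACTIONS = ("do_nothing", "pull_lever")


def outcome(action: str) -> tuple[int, int]:
    """Return (deaths, survivors) for the given action."""
    if action == "do_nothing":
        return MAIN_TRACK, SIDE_TRACK
    if action == "pull_lever":
        return SIDE_TRACK, MAIN_TRACK
    raise ValueError(f"unknown action: {action}")


# Each objective maps an action to a score; higher is better.
OBJECTIVES = {
    "maximize_life": lambda a: outcome(a)[1],
    "minimize_death": lambda a: -outcome(a)[0],
    # Assumption: "change" is measured as number of deaths only.
    "minimize_change": lambda a: -outcome(a)[0],
}


def decide(objective: str) -> str:
    """Pick the action with the best score under the named objective."""
    return max(ACTIONS, key=OBJECTIVES[objective])


if __name__ == "__main__":
    for name in OBJECTIVES:
        print(name, "->", decide(name))  # each line ends in pull_lever
```

Under these definitions every objective returns `pull_lever`. Note that the agreement comes from how the scores were defined, not from anything the code discovered.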

Step 4: Where the framework works

- Forces clarity: stating the metric (life, death, change) makes you define “best.”
- Consistency check: in this case, all the metrics align → pull the lever.
- Implementable: you could encode this into software, which is why people bring it up for AI and autonomous cars.

Step 5: Where it fails

- Metrics aren’t neutral: “maximize life” already assumes utilitarian math is the right moral lens.
- Bias is suspect: punishing people for their past choices is philosophically shaky.
- Act vs omission ignored: many argue that killing 1 is morally different from letting 5 die, even if the numbers are the same.
- Overgeneralization: real-world AI dilemmas involve uncertainty, probabilities, and laws, not neat 5 vs 1 tradeoffs.
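The act vs omission point can be made concrete with one extra parameter. In the sketch below, `act_weight` is a hypothetical multiplier on deaths caused by actively pulling the lever. It is an assumption made purely for illustration, not a value anyone has derived.

```python
def moral_cost(action: str, act_weight: float = 1.0) -> float:
    """Cost of an action; deaths caused by acting are scaled by act_weight."""
    if action == "do_nothing":
        return 5.0  # five die by omission
    if action == "pull_lever":
        return 1.0 * act_weight  # one dies as a result of an active choice
    raise ValueError(f"unknown action: {action}")


def decide(act_weight: float = 1.0) -> str:
    """Choose the action with the lowest cost under the given weighting."""
    return min(("do_nothing", "pull_lever"),
               key=lambda a: moral_cost(a, act_weight))


print(decide(act_weight=1.0))  # pull_lever: pure head count
print(decide(act_weight=6.0))  # do_nothing: killing counted as 6x worse than letting die
```

The code stays just as clean either way. The decision flips on a number that no amount of engineering can supply.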

Conclusion: Who decides?

When you run the trolley problem like an engineer, the answer looks simple: pull the lever. The math lines up, the code is clean, and the system is consistent.

But that’s the danger. Real ethical dilemmas are not engineering puzzles. Metrics are not neutral, tradeoffs are contested, and lives can’t be reduced to toggles and weightings.

That’s why engineers should not be the ones deciding the moral frameworks for autonomous systems. Our job is to make sure the system runs faithfully once those rules are defined. But the rules themselves need to come from broader human input: ethicists, philosophers, communities, even public debate.

Otherwise, we’re not solving the trolley problem. We’re just hiding it inside code.

A real-world example: Tesla

Tesla’s Autopilot and Full Self-Driving systems show what happens when these choices aren’t surfaced. Behind the scenes, every time the car decides whether to brake, swerve, or prioritize occupants over pedestrians, it’s making moral tradeoffs. But those tradeoffs are hidden inside proprietary code and machine learning models, not debated openly.

Tesla markets its system aggressively, sometimes suggesting more autonomy than regulators say is safe. Accidents and near misses suggest that engineers have already embedded ethical decisions without telling society what those decisions are.

That’s exactly the danger: when the trolley problem is coded into cars, it doesn’t go away. It just gets locked into algorithms that the public never sees.

References / Further Reading

- Tesla’s Autopilot involved in 13 fatal crashes, U.S. safety regulator says. The Guardian (April 2024)

  Highlights how U.S. regulators tied Tesla’s driver-assist systems to multiple deadly crashes, underscoring the real-world stakes of hidden decision-making.

- List of Tesla Autopilot crashes. Wikipedia

  A running catalog of incidents, investigations, and fatalities linked to Tesla’s Autopilot, showing patterns and scale.

- The Ethical Implications: The ACM/IEEE-CS Software Engineering Code applied to Tesla’s “Autopilot” System. arXiv:1901.06244

  Analyzes Tesla’s release and marketing practices against professional software engineering ethics standards.

- Tesla’s Autopilot: Ethics and Tragedy. arXiv:2409.17380

  A case study probing responsibility, accountability, and moral risk when Autopilot contributes to accidents.